Decade ago data was just an entity which helped business to operate smoothly. By then data was considered as some sort of business related information just stored in a database, which can be retrieved based on the demand/requirement as per the demand. E.g: a bunch of products, transactions such as invoices, receipts etc. or customer details.

But today data has become an important entity, which drives business towards success. In today’s fast-moving world, companies who owned data and does analytics has become the most successful companies.

However one of the major concerns we have today is how to protect these data. Especially the sensitive ones. Since more data is being exposed to the cloud, it’s essential to protect it from going to the wrong hands and it has become a major problem since hackers nowadays are well equipped and are always on the look for stealing this valuable information whenever possible, since it’ll be a valuable asset in the open market.

But protecting the data from unauthorized access is a must. Failing to do so can have unexpected consequences. Entire business could get wiped out of the business due to this. Hence enterprises should seriously consider protecting their data and we will discuss how we can achieve this in SQL Server through data encryption.

Ways of Data Encryption in SQL Server

There are few ways of encrypting data in SQL Server. We will discuss the advantages and disadvantages of each method.

SQL Server provides following methods to encrypt data:

- T-SQL Functions

- Using Symmetric Keys**

- Using Asymmetric Keys**

- Using Certificates**

- Transparent Data Encryption**

**Note : In this article I only plan to explain encryption/decryption functionality using T-SQL. I will talk about other methods which is mentioned about in future articles.

Using T-SQL Functions

Encrypting data using ENCRYPTBYPASSPHRASE

Encryption is done using T-SQL function ENCRYPTBYPASSPHRASE.

ENCRYPTBYPASSPHRASE(passphrase,text_to_encrypt)

The first parameter is the passphrase which can be any text or a variable of type NVARCHAR, CHAR, VARCHAR, BINARY, VARBINARY, or NCHAR. The function uses this passphrase to generate a symmetric key.

For the illustration purpose we will create a table which to hold employee details

CREATE TABLE dbo.Employee(

Id INT

,EmpName VARCHAR(100)

,EmpDOB SMALLDATETIME

,SSN VARBINARY(128)

)

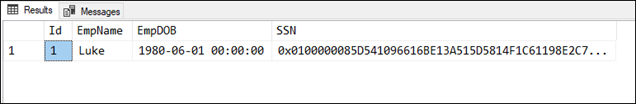

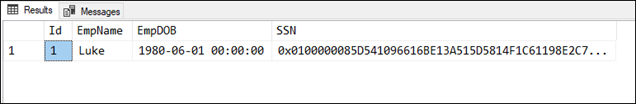

This example is to demonstrate the data encryption during INSERT DML Statement

INSERT INTO dbo.Employee(

Id

,EmpName

,EmpDOB

,SSN

)

VALUES(

1

,'Luke'

,'01-June-1980'

,ENCRYPTBYPASSPHRASE('Pa$$W0rd4EnCRyPt10n','111-22-3333')

)

Further details can be found in the Microsoft Documentation:

https://docs.microsoft.com/en-us/sql/t-sql/functions/encryptbypassphrase-transact-sql

Decrypting data using T-SQL function DECRYPTBYPASSPHRASE

Will take the same details which we inserted during the first case. The encrypted data can be decrypted using SQL function DECRYPTBYPASSPHRASE. If any attempt has been made to decrypt the data without using DECRYPTBYPASSPHRASE nor providing the proper details, it will fail the operation.

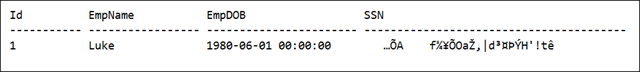

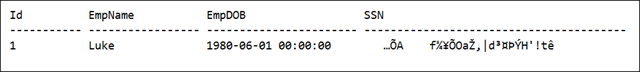

Without Decryption

SELECT

Id,EmpName,EmpDOB,CONVERT(VARCHAR(128),SSN) AS SSN

FROM

dbo.Employee

WHERE

Id = 1

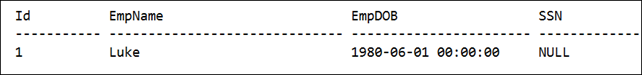

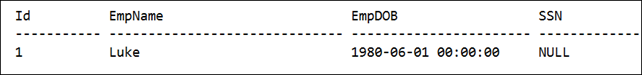

With Decryption (Incorrect Pass-phrase)

SELECT

Id

,EmpName

,EmpDOB

,DECRYPTBYPASSPHRASE('IncorrectPassword',SSN ) AS SSN

FROM

dbo.Employee

WHERE

Id = 1

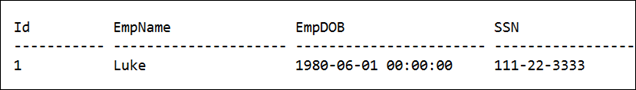

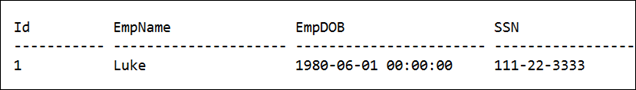

But providing the correct pass-phrase will return the correct details

SELECT

Id

,EmpName

,EmpDOB

,CONVERT(VARCHAR(128),DECRYPTBYPASSPHRASE('Pa$$W0rd4EnCRyPt10n',SSN )) AS SSN

FROM

dbo.Employee

WHERE

Id = 1

However there could be a requirement which you need to protect your data, not from stealing, but from getting updated with someone else’s.

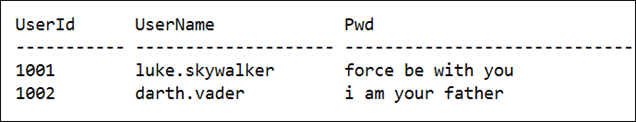

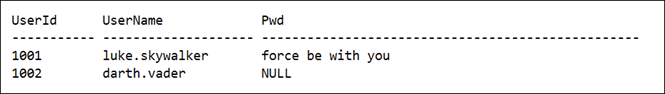

One classic example is a login table. Suppose we have a table which stores login credentials, which is having the following structure.

*Note: In real world cases, usually it’s more secure if you hash passwords rather than encrypting them. But I am using encryption for illustration purpose.

So if a person has access to update the details on the password column, he/she can easily replace the contents with their own and log on using that. This can be stopped by providing two additional values when details are inserted to the table using ENCRYPTPASSPHRASE.

CREATE TABLE dbo.LoginCredentails(

UserId INT

,UserName VARCHAR(20)

,Pwd VARBINARY(128)

)

We will insert two records to the above created table.

INSERT INTO dbo.LoginCredentails(

UserId

,UserName

,Pwd

)

VALUES

(1001,'luke.skywalker',ENCRYPTBYPASSPHRASE('Pa$$W0rd4EnCRyPt10n','force be with you',1,CAST(1001 AS sysname)))

,(1002,'darth.vader',ENCRYPTBYPASSPHRASE('Pa$$W0rd4EnCRyPt10n','i am your father',1,CAST(1002 AS sysname)))

Please note that unlike the previous example, we are now providing two additional values to the ENCRYPTBYPASSPHRASE function. The first values is 1, which indicates whether whether an authenticator will be encrypted together with the password. If the value is 1 and authenticator will be added. The second value is the data which from which to derive an authenticator. In this example we will use a value similar to a user id, so that when the value is decrypted, we could use the same value.

Following is a function to fetch the decrypted password based on the UserId. Assume we will be using this when validating the credential prior login.

CREATE FUNCTION Fn_GetUserPwdById(@UserId AS INT)

RETURNS VARCHAR(50)

AS

BEGIN

DECLARE @Pwd AS VARCHAR(50)

SELECT

@Pwd = CONVERT(VARCHAR(50),DECRYPTBYPASSPHRASE('Pa$$W0rd4EnCRyPt10n',LC.Pwd,1,CAST(LC.UserId AS sysname)))

FROM

dbo.LoginCredentails AS LC

WHERE

LC.UserId = @UserId

RETURN @Pwd

END

Using the aforementioned function we will retrieve the details.

SELECT

UserId

,UserName

,dbo.Fn_GetUserPwdById(UserId) AS Pwd

FROM

dbo.LoginCredentails

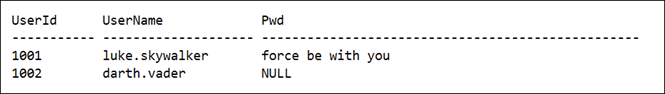

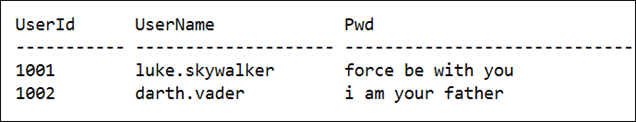

But querying the data simply will get you the binary string of the encrypted value.

SELECT

UserId

,UserName

,Pwd

FROM

dbo.LoginCredentails

Suppose if a person has enough privileges to do an update the password with a known one (from an existing user) it’ll allow him/her to login to the system impersonating any user.

UPDATE LC SET LC.Pwd = (

SELECT LC2.Pwd FROM dbo.LoginCredentails AS LC2

WHERE LC2.UserName = 'luke.skywalker'

)

FROM dbo.LoginCredentails AS LC

WHERE

LC.UserName = 'darth.vader'

But if when the same function is used for decryption, it will return NULL for the updated record, preventing the login to be invalid if it’s replaced using an existing one.

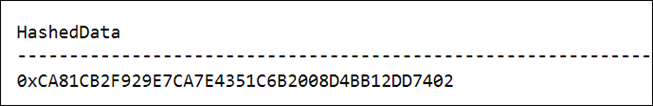

Hashing data using HASBYTESApart from the above mentioned function, there’s another function which can be used to hash data. Unlike encrypting, there’s no way you can reverse the hashed data and see the raw details.

Syntax:

HASHBYTES ( 'algorithm', { @input | 'input' } )

/*

algorithm::= MD2 | MD4 | MD5 | SHA | SHA1 | SHA2_256 | SHA2_512

*/

There are two parameters which you require to provide. The first parameter is the algorithm which should be used for hashing. The hashing algorithm can be any of the following:

- MD4

- MD5

- SHA

- SHA1

- SHA2_256

- SHA2_512

The second parameter is the input, which needs to be hashed. This can be either a character or binary string.

The return value is VARBINARY(n). n = maximum 8000 bytes.

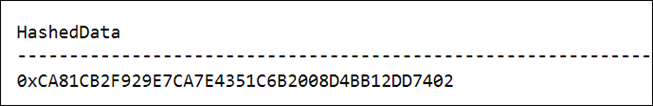

Example:

DECLARE

@TextToHash AS NVARCHAR(1000) = N'My Secret Message'

SELECT HASHBYTES('SHA1',@TextToHash) AS HashedData

Further details can be found in the Microsoft Documentation:

https://docs.microsoft.com/en-us/sql/t-sql/functions/hashbytes-transact-sql

Hope this might be useful to you and please feel free to comment your ideas.