When it comes to modern web development, JSON is one of the well known technologies when you require to exchange information between different applications. Before JSON, XML was used (and still being used in various applications and technologies) to do this job. But compared to XML, JSON is less verbose (like XML, JSON doesn’t have a closing tag), and it will make the JSON data somewhat smaller in size, ultimately making the data flow much faster. Perhaps the most significant advantage that JSON has over XML is that JSON is a subset of JavaScript, so code to parse and package it fits very naturally into JavaScript code. This seems highly beneficial for JavaScript programs and that happens to be a good reason for JSON to be very popular among web application developers.

However using XML or JSON is up to the personal preference and the requirement.

Prior to SQL Server 2016, there’s wasn’t any support for JSON in the earlier editions. So native JSON support is one of the new features which Microsoft introduced in SQL Server 2016.

Prior to SQL Server 2016 there are other databases which supports JSON.

- MongoDB

- CouchDB

- eXistDB

- Elastisearch

- BaseX

- MarkLogic

- OrientDB

- Oracle Database

- PostgresSQL

- Riak

But my main focus in this post will be the JSON support in SQL Server 2016.

In order to support JSON, in SQL 2016 following in built functions have been introduced:

- ISJSON

- JSON_VALUE

- JSON_QUERY

- JSON_MODIFY

- OPENJSON

- FOR JSON

There’s no specific data type in SQL Server to be used for JSON (unlike XML). You have to use NVARCHAR when you interact with JSON in SQL Server.

This is how we assign JSON data to a variable.

DECLARE @varJData AS NVARCHAR(4000)

SET @varJData =

N'{

"OrderInfo":{

"Tag":"#ONLORD_12546_45634",

"HeaderInfo":{

"CustomerNo":"CUS0001",

"OrderDate":"04-Jun-2016",

"OrderAmount":1200.00,

"OrderStatus":"1",

"Contact":["+0000 000 0000000000", "info@abccompany.com", "finance@abccompany.com"]

},

"LineInfo":[

{"ProductNo":"P00025", "Qty":3, "Price":200},

{"ProductNo":"P12548", "Qty":2, "Price":300}

]

}

}'

We will look closely how the aforementioned functions can be used with some sample

ISJSON()

As the name implies ISJSON functions is used to validate a given JSON string. The function will return in INT value and If the provided string is properly formatted as JSON it will return 1 else it will return 0.

Eg:

SELECT ISJSON(@varJData)

JSON_VALUE()

JSON_VALUE function can be used to return a scalar value from a JSON string.

Eg:

DECLARE @varJData AS NVARCHAR(4000)

SET @varJData =

N'{

"OrderInfo":{

"Tag":"#ONLORD_12546_45634",

"HeaderInfo":{

"CustomerNo":"CUS0001",

"OrderDate":"04-Jun-2016",

"OrderAmount":1200.00,

"OrderStatus":"1",

"Contact":["+0000 000 0000000000", "info@abccompany.com", "finance@abccompany.com"]

},

"LineInfo":[

{"ProductNo":"P00025", "Qty":3, "Price":200},

{"ProductNo":"P12548", "Qty":2, "Price":300}

]

}

}'

SELECT JSON_VALUE(@varJData,'$.OrderInfo.Tag')

SELECT JSON_VALUE(@varJData,'$.OrderInfo.HeaderInfo.CustomerNo')

Please note that the provided key is case sensitive and instead of ‘Tag’ if you pass ‘tag’ it will return a NULL since the function cannot find the key.

SELECT JSON_VALUE(@varJData,'$.OrderInfo.tag') /* This will returns NULL */

In such case if you require to see the exact error or the root cause, you need to specify ‘strict’ prior to the key. Eg:

SELECT JSON_VALUE(@varJData,'strict $.OrderInfo.tag') /* This will thorw an Error */

This will return the following error message instead of returning a NULL value.

Msg 13608, Level 16, State 1, Line 62

Property cannot be found on the specified JSON path.

Also JSON_VALUE can be used to fetch an element from a simple array (not an object array). In our sample JSON there are two arrays, which are ‘Contact’ and ‘LineInfo’, where the first being a simple string array and the latter is an object array.

Suppose if we require to fetch only the phone number from the contact details we can use the following query:

SELECT JSON_VALUE(@varJData,'$.OrderInfo.HeaderInfo.Contact[0]')

Also this can be used when we require to fetch an attribute from an array element as well. Suppose if we require to get the product number from the first element of the ‘LineInfo’ we could use:

SELECT JSON_VALUE(@varJData, '$.OrderInfo.LineInfo[0].ProductNo')

JSON_QUERY()

JSON_QUERY function is used when you require to extract an array of data or an object from a JSON.And we can extract the contact details and the line details which are arrays in this scenario as follows

SELECT JSON_QUERY(@varJData, '$.OrderInfo.HeaderInfo.Contact')

SELECT JSON_QUERY(@varJData, '$.OrderInfo.LineInfo')

And this can be used to fetch a certain element from an object array. Suppose if we want to fetch details for the second prodcut in the LineInfo section we can use the following:

SELECT JSON_QUERY(@varJData, '$.OrderInfo.LineInfo[1]')

**Note: If the JSON text contains duplicate properties - for example, two keys with the same name on the same level- the JSON_VALUE and JSON_QUERY functions will return the first value that matches the path.

JSON_MODIFY()

JSON_MODIFY function updates the value of a property in a JSON string and return the updated JSON string. The syntax for this function is as follows:

JSON_MODIFY(expression, path, new_value)

Using this function you can either Update, Insert, Delete or Append a value to the JSON string. We will see each of these operations using the above JSON string.

Updating an Exitsing Value

In order to update an existing value you need to provide the exact path followed by the value which should be updated to.

SET @varJData = JSON_MODIFY(@varJData,'$.OrderInfo.Tag','#NEWTAG_00001')

SELECT JSON_VALUE(@varJData,'$.OrderInfo.Tag')

Deleting an Existing Value

In order to delete an existing value you need to provide the exact path follwed by the value ‘NULL’.

SET @varJData = JSON_MODIFY(@varJData,'$.OrderInfo.Tag',NULL)

SELECT JSON_VALUE(@varJData,'$.OrderInfo.Tag')

PRINT @varJData

When the value is printed you can see that the ‘Tag’ attribute has been completely removed from the JSON string.

Inserting a Value

In order to insert an attribute along with a value you need to provide a path which isn’t currently availble in the JSON followed by the value. If the provided path is already present then the existing value will be replaced by the new value. The new value will always be added at the end of the existing JSON string.

SET @varJData = JSON_MODIFY(@varJData,'$.OrderInfo.Batch','#B_100000')

SELECT JSON_VALUE(@varJData,'$.OrderInfo.Batch')

PRINT @varJData

Appending a Value

In order to append an existing array in a JSON, you need to use ‘append’ before the path. Suppose if we require to add another element to the

SET @varJData = JSON_MODIFY(@varJData, 'append $.OrderInfo.HeaderInfo.Contact','+0010 111 1111111111')

SELECT JSON_QUERY(@varJData,'$.OrderInfo.HeaderInfo.Contact')

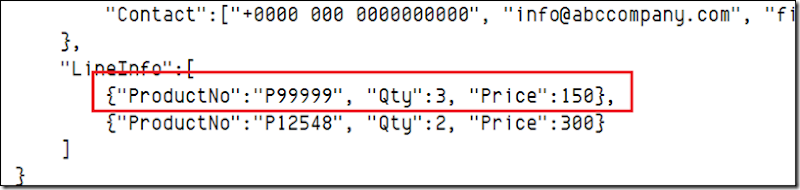

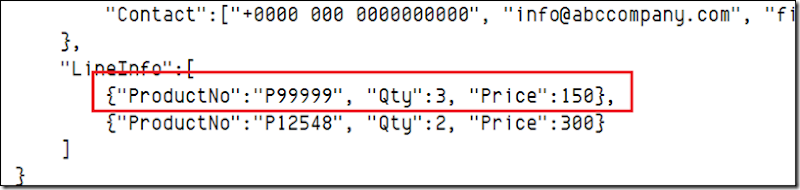

JSON_MODIFY can only manipulate a single value at a time. Therefore if the requirement is to change multiple values within a single query, you need to use JSON_MODIFY function multiple times. Suppose if we require to change the ‘ProductNo’ and the ‘Price’ of the first product in the ‘LineInfo’ we coud use the following syntax.

SET @varJData =

JSON_MODIFY(

JSON_MODIFY(@varJData,'$.OrderInfo.LineInfo[0].ProductNo','P99999')

,'$.OrderInfo.LineInfo[0].Price'

,150

)

FOR JSON

FOR JSON functionality is used When we are required to export SQL Tabular data as JSON data. This is very similar to the functionality of ‘FOR XML’. Each row will be formatted as a JSON object and values in cells will be generated as values of those respective JSON objects. Column names (or aliases) will be used as key names. Based on the options provided there are two variations in ‘FOR JSON’ usage.

1. FOR JSON AUTO - This will automatically create nested JSON sub arrays based on the table hierarchy used in the query. (similar to FOR XML AUTO)

2. FOR JSON PATH - This enables you to define the structure of output JSON using the column names/aliases. If you put dot-separated names in the column aliases, JSON properties will follow the naming convention. (This is similar to FOR XML PATH where you can use slash separated paths)

In order to illustrate the aforementioned concepts we need to prepare some sample data. Please use the following scripts to generate the necessary data.

--== Generate Required Schemas ==--

CREATE TABLE OrderHeader(

TAG VARCHAR(24)

,ORD_NO VARCHAR(10)

,CUST_NO VARCHAR(8)

,ORD_DATE DATE

,ORD_AMOUNT MONEY

,ORD_STATUS TINYINT

)

CREATE TABLE OrderLine(

ORD_NO VARCHAR(10)

,LINE_NO INT

,PROD_NO VARCHAR(8)

,ORD_QTY INT

,ITEM_PRICE MONEY

)

CREATE TABLE CustomerContact(

CONT_ID INT

,CUST_NO VARCHAR(8)

,CONTACT_DETAILS VARCHAR(24)

)

--== Insert Sample Data ==--

INSERT INTO dbo.OrderHeader(TAG,ORD_NO,CUST_NO,ORD_DATE,ORD_AMOUNT,ORD_STATUS)

VALUES('#ONLORD_12546_45634','ORD_1021','CUS0001','04-Jun-2016',1200.00,1)

INSERT INTO dbo.OrderLine(ORD_NO,LINE_NO,PROD_NO,ORD_QTY,ITEM_PRICE)

VALUES ('ORD_1021',1,'P00025',3,200.00), ('ORD_1021',1,'P12548',2,300.00)

INSERT INTO dbo.CustomerContact(CONT_ID, CUST_NO, CONTACT_DETAILS)

VALUES (1,'CUS0001','+0000 000 0000000000') ,(2,'CUS0001','info@abccompany.com'),(3,'CUS0001','finance@abccompany.com')

Extracting data as JSON using FOR JSON AUTO

SELECT

H.TAG

,H.ORD_NO

,H.CUST_NO

,H.ORD_DATE

,H.ORD_AMOUNT

,H.ORD_STATUS

,L.ORD_NO

,L.LINE_NO

,L.PROD_NO

,L.ORD_QTY

,L.ITEM_PRICE

FROM

dbo.OrderHeader AS H

JOIN dbo.OrderLine AS L

ON L.ORD_NO = H.ORD_NO

WHERE

H.ORD_NO = 'ORD_1021'

FOR JSON AUTO

You will get a similar result which is shown below:

[

{

"TAG":"#ONLORD_12546_45634",

"ORD_NO":"ORD_1021",

"CUST_NO":"CUS0001",

"ORD_DATE":"2016-06-04",

"ORD_AMOUNT":1200.0000,

"ORD_STATUS":1,

"L":[

{"ORD_NO":"ORD_1021","LINE_NO":1,"PROD_NO":"P00025","ORD_QTY":3,"ITEM_PRICE":200.0000},

{"ORD_NO":"ORD_1021","LINE_NO":1,"PROD_NO":"P12548","ORD_QTY":2,"ITEM_PRICE":300.0000}

]

}

]

As described previously ‘FOR JSON AUTO’ will simply convert the column names or aliases as keys and produce the JSON. Table aliases will be used to create sub arrays.

But we could get a similar resultset like what we had in our previous examples by tweaking the above select statement as follows:

SELECT

H.TAG AS Tag

,H.ORD_NO AS OrderNo

,H.CUST_NO AS CustNo

,H.ORD_DATE AS OrderDate

,H.ORD_AMOUNT AS OrderAmount

,H.ORD_STATUS AS OrderStatus

,LineInfo.ORD_NO AS [OrderNo]

,LineInfo.LINE_NO AS [LineNo]

,LineInfo.PROD_NO AS [ProdNo]

,LineInfo.ORD_QTY AS [Qty]

,LineInfo.ITEM_PRICE AS [ItemPrice]

FROM

dbo.OrderHeader AS H

JOIN dbo.OrderLine AS LineInfo

ON LineInfo.ORD_NO = H.ORD_NO

WHERE

H.ORD_NO = 'ORD_1021'

FOR JSON AUTO, ROOT ('OrderInfo')

Then we will be able to get the following JSON string.

{

"OrderInfo":[

{

"Tag":"#ONLORD_12546_45634",

"OrderNo":"ORD_1021",

"CustNo":"CUS0001",

"OrderDate":"2016-06-04",

"OrderAmount":1200.0000,

"OrderStatus":1,

"LineInfo":[

{"OrderNo":"ORD_1021","LineNo":1,"ProdNo":"P00025","Qty":3,"ItemPrice":200.0000},

{"OrderNo":"ORD_1021","LineNo":1,"ProdNo":"P12548","Qty":2,"ItemPrice":300.0000}

]

}

]

}

Extracting data as JSON using FOR JSON PATH

We can use the FOR JSON PATH functionality to format the output JSON the way we require easily. But there’s a restriction when we use ‘FOR JSON PATH’ to extract data, which is that you cannot have the same column name (or alias) duplicated among multiple columns. This will result in an error.

We will see how the details will be fetched using ‘FOR JSON PATH’

SELECT

H.TAG

,H.ORD_NO

,H.CUST_NO

,H.ORD_DATE

,H.ORD_AMOUNT

,H.ORD_STATUS

--,L.ORD_NO --If this line is uncommented it will throw an error

,L.LINE_NO

,L.PROD_NO

,L.ORD_QTY

,L.ITEM_PRICE

FROM

dbo.OrderHeader AS H

JOIN dbo.OrderLine AS L

ON L.ORD_NO = H.ORD_NO

WHERE

H.ORD_NO = 'ORD_1021'

FOR JSON PATH

We will get the following JSON result.

[

{

"TAG":"#ONLORD_12546_45634",

"ORD_NO":"ORD_1021",

"CUST_NO":"CUS0001",

"ORD_DATE":"2016-06-04",

"ORD_AMOUNT":1200.0000,

"ORD_STATUS":1,

"LINE_NO":1,

"PROD_NO":"P00025",

"ORD_QTY":3,

"ITEM_PRICE":200.0000

},

{

"TAG":"#ONLORD_12546_45634",

"ORD_NO":"ORD_1021",

"CUST_NO":"CUS0001",

"ORD_DATE":"2016-06-04",

"ORD_AMOUNT":1200.0000,

"ORD_STATUS":1,

"LINE_NO":1,

"PROD_NO":"P12548",

"ORD_QTY":2,

"ITEM_PRICE":300.0000

}

]

Advantage in using ‘FOR JSON PATH’ is that you have the ability to control the structure using the column names/aliases. When dot seperated aliases are used, JSON properties will follow the naming convention. Please consider the below query and the results.

SELECT

H.TAG AS 'HeaderInfo.Tag'

,H.ORD_NO AS 'HeaderInfo.OrderNo'

,H.CUST_NO AS 'HeaderInfo.CustNo'

,H.ORD_DATE AS 'HeaderInfo.OrderDate'

,H.ORD_AMOUNT AS 'HeaderInfo.OrderAmount'

,H.ORD_STATUS AS 'HeaderInfo.OrderStatus'

,L.ORD_NO AS 'LineInfo.OrderNo'

,L.LINE_NO AS 'LineInfo.LineNo'

,L.PROD_NO AS 'LineInfo.ProdNo'

,L.ORD_QTY AS 'LineInfo.Qty'

,L.ITEM_PRICE AS 'LineInfo.ItemPrice'

FROM

dbo.OrderHeader AS H

JOIN dbo.OrderLine AS L

ON L.ORD_NO = H.ORD_NO

WHERE

H.ORD_NO = 'ORD_1021'

FOR JSON PATH

You will see the following JSON result.

[

{

"HeaderInfo":{

"Tag":"#ONLORD_12546_45634",

"OrderNo":"ORD_1021",

"CustNo":"CUS0001",

"OrderDate":"2016-06-04",

"OrderAmount":1200.0000,

"OrderStatus":1

},

"LineInfo":{"OrderNo":"ORD_1021","LineNo":1,"ProdNo":"P00025","Qty":3,"ItemPrice":200.0000}

},

{

"HeaderInfo":{

"Tag":"#ONLORD_12546_45634",

"OrderNo":"ORD_1021",

"CustNo":"CUS0001",

"OrderDate":"2016-06-04",

"OrderAmount":1200.0000,

"OrderStatus":1

},

"LineInfo":{"OrderNo":"ORD_1021","LineNo":1,"ProdNo":"P12548","Qty":2,"ItemPrice":300.0000}

}

]

OPENJSON

OPENJSON is a table value function which will go through a given JSON string, and returns a relational table with it’s contents. It’ll iterate through JSON object arrays, elemets and generates a row for each element. There are two variations of this functionality.

- Without a pre-defined schema where the values will be returned as key value pairs including it’s type to identify what sort of value is being returned.

- With a well defined schema. This schema will be provided by us in the OPENJSON statement.

OPENJSON without a pre-defined schema

We will use the following JSON data string to find out the types which will be returned based on the data type.

{

"Null Data":null,

"String Data":"Some String Data",

"Numeric Data": 1000.00,

"Boolean Data": true,

"Array Data":["A","B","C"],

"Object Data":{"SomeKey":"Some Value"}

}

DECLARE @vJSON AS NVARCHAR(4000) = N'{

"Null Data":null,

"String Data":"Some String Data",

"Numeric Data": 1000.00,

"Boolean Data": true,

"Array Data":["A","B","C"],

"Object Data":{"SomeKey":"Some Value"}

}';

SELECT * FROM OPENJSON(@vJSON)

With some realistic set of JSON data.

DECLARE @vJSON AS NVARCHAR(4000) = N'{

"Tag":"#ONLORD_12546_45634",

"OrderNo":"ORD_1021",

"CustNo":"CUS0001",

"OrderDate":"2016-06-04",

"OrderAmount":1200.0000,

"OrderStatus":1

}';

SELECT * FROM OPENJSON(@vJSON)

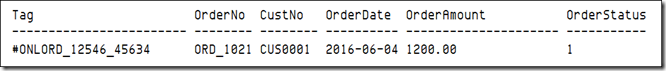

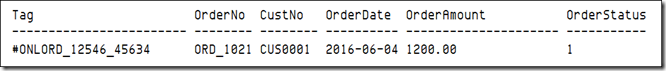

OPENJSON with a pre-defined schema

We will use the same JSON string which we have used in the previous example and generate the result set with a pre-defined schema.

DECLARE @vJSON AS NVARCHAR(4000) = N'{

"Tag":"#ONLORD_12546_45634",

"OrderNo":"ORD_1021",

"CustNo":"CUS0001",

"OrderDate":"2016-06-04",

"OrderAmount":1200.0000,

"OrderStatus":1

}';

SELECT * FROM OPENJSON(@vJSON) WITH(

Tag VARCHAR(24)

,OrderNo VARCHAR(8)

,CustNo VARCHAR(8)

,OrderDate DATE

,OrderAmount MONEY

,OrderStatus INT

)

This is basically what has been provided to support with JSON data in SQL 2016 natively. Hope this would be helpful for you.

![image_thumb[2] image_thumb[2]](https://lh3.googleusercontent.com/-n-fFIrbdqqw/Vp91GMn8jgI/AAAAAAAADmg/yqzhpO_Zz-k/image_thumb%25255B2%25255D_thumb%25255B6%25255D.png?imgmax=800)

![image_thumb[4] image_thumb[4]](https://lh3.googleusercontent.com/-rCHonaF_8ls/Vp91HvEHO1I/AAAAAAAADmw/KvL7ahgmw7Q/image_thumb%25255B4%25255D_thumb%25255B3%25255D.png?imgmax=800)